Let's Balance the Load: Part 1

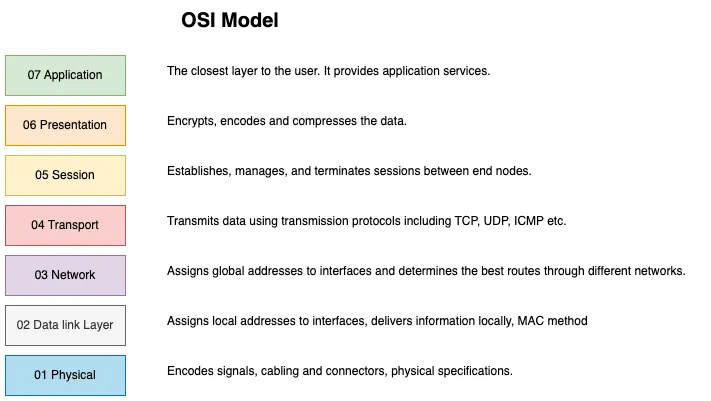

Imagining a load balancer at each layer of the OSI model.

Need for Load Balancers

In the ever-expanding digital landscape, the number of internet users grew from 416 million in 2000 to 4.70 billion in 2020, according to data from ourworldindata.org. That is roughly an 11-fold increase in just two decades. This surge in users presents numerous challenges, one of which is the immense load placed on the web servers that serve them.

Servers have limited capacity and struggle to keep up with this growing demand. A single server can cater to only a limited number of users, leaving the rest with a poor experience. To solve this problem, engineers have applied many methods, such as regional servers, local websites, peer-to-peer networking, private clouds, and VPNs.

One of the most commonly used methods is load balancing. A load balancer distributes incoming network traffic across multiple servers, improving the performance and reliability of the system. You can think of it as a traffic police officer directing cars into different lanes so that no single lane gets congested.
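
To make the traffic-police analogy concrete, here is a minimal Python sketch of round robin, one of the simplest ways to distribute requests: each new request goes to the next server in a fixed rotation. The server names are hypothetical placeholders, and real load balancers typically add health checks and weighting on top of this idea.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next server in a fixed rotation."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("need at least one server")
        self._rotation = cycle(servers)

    def pick(self) -> str:
        # Like a traffic officer waving cars into each lane in turn.
        return next(self._rotation)


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])  # hypothetical names
assignments = [balancer.pick() for _ in range(6)]
print(assignments)
# ['server-a', 'server-b', 'server-c', 'server-a', 'server-b', 'server-c']
```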

Imagining Load Balancers in OSI Model

Load can be balanced at each layer of the OSI model.

Now let's see how we can build a load balancer at each layer:

Physical Layer

Let’s consider a blogging website with 2 servers, each capable of handling only 100 users, so our total capacity is 200 users. Our system will not be able to handle the 201st user because we don’t have enough capacity. We can connect the first 100 users to the first server and the remaining 100 users to the second server directly using physical data lines. This way, we have balanced the load at the physical layer.
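
The static wiring above amounts to a fixed mapping decided ahead of time. This toy Python sketch models it with the example's numbers (100 users per server, two servers, no room for user 201); it is only a model of the assignment, not of real cabling, and the server names are hypothetical.

```python
# Toy model of the physical-layer setup: each user is wired to a server ahead
# of time, so "balancing" is a fixed, manual mapping rather than a decision.
SERVER_CAPACITY = 100  # users each server can handle (from the example)
SERVERS = ["server-1", "server-2"]  # hypothetical names


def wired_server(user_number: int) -> str:
    """Return the server a given user's cable is plugged into (users start at 1)."""
    if user_number < 1:
        raise ValueError("user numbers start at 1")
    index = (user_number - 1) // SERVER_CAPACITY
    if index >= len(SERVERS):
        # Total capacity is len(SERVERS) * SERVER_CAPACITY = 200 users.
        raise RuntimeError(f"no capacity left for user {user_number}")
    return SERVERS[index]


print(wired_server(1))    # server-1
print(wired_server(100))  # server-1
print(wired_server(101))  # server-2
print(wired_server(200))  # server-2
```

Adding a 201st user means new hardware and new cables, which is exactly the manual intervention listed in the cons.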

Cons:

- We need to create 2 physical networks.

- The load is managed manually.

- Lots of network cables will be required.

- Adding a new user requires manual intervention.

Data Link Layer

Now let's take a different example, where our single server can handle 100 users but our network can only carry traffic from 50 users at a time. To handle this kind of scenario, we can install multiple network interfaces on the single server and combine the two networks into one logical link. This technique is called link aggregation (also known as NIC bonding or teaming).
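
A minimal sketch of how an aggregated link might decide which physical NIC carries a given frame. The hash is a simplified version of the idea behind the Linux bonding driver's `layer2` transmit hash policy (XOR of MAC addresses, modulo the number of links); the real driver's formula differs in detail, and the function name and MAC addresses below are made up for illustration.

```python
def pick_link(src_mac: str, dst_mac: str, num_links: int) -> int:
    """Choose which physical link (0-based) carries frames between two MACs.

    Simplified layer-2 hash: XOR the last byte of each MAC address and take
    the result modulo the number of aggregated links. Frames of the same
    conversation always hash to the same link, which avoids reordering.
    """
    if num_links < 1:
        raise ValueError("need at least one link")
    src_last = int(src_mac.split(":")[-1], 16)
    dst_last = int(dst_mac.split(":")[-1], 16)
    return (src_last ^ dst_last) % num_links


SERVER_MAC = "02:00:00:00:00:01"  # hypothetical server MAC
print(pick_link(SERVER_MAC, "02:00:00:00:00:0a", 2))  # 1
print(pick_link(SERVER_MAC, "02:00:00:00:00:0b", 2))  # 0
```

Because one conversation always maps to one link, a single client is still limited to that link's bandwidth; the gain comes from spreading many clients across the links.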

Cons:

- We need more hardware.

- A machine can host only a limited number of network interface cards because it has a limited number of PCIe slots and lanes.

- Scaling becomes harder as the number of interfaces grows.

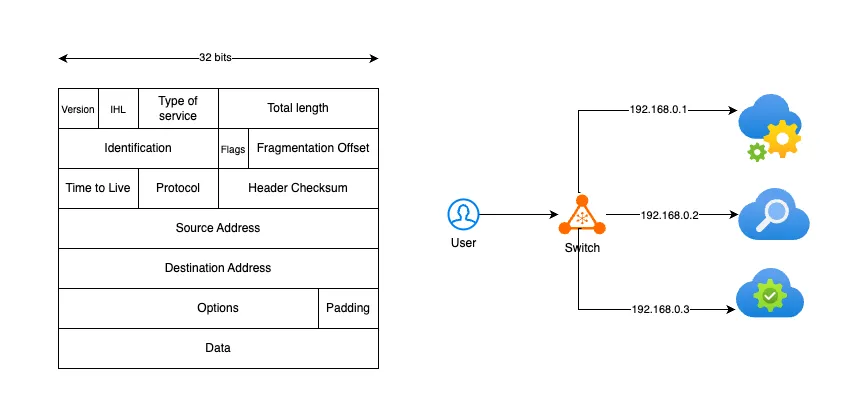

Network Layer

The network layer manages routing and the IP addresses assigned to network interfaces. Here we can use IP addresses and routing tables to route traffic to different servers based on the IP address of the client machine. IPv4 has a limited number of public IP addresses, so we use Network Address Translation (NAT) to assign private IP addresses within internal networks and create routes based on our network topology.

Load Balancer at Network Layer

Cons:

- Routing tables can become complex as the number of routes grows.

- Client/stream stickiness is required: all packets of a connection need to reach the same destination server so that the complete data stream can be delivered.
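
The IP-based routing described in this section can be sketched with Python's standard `ipaddress` module, using longest-prefix match the way a routing table does. The routing table, the client subnets (taken from the 203.0.113.0/24 documentation range), and the private backend addresses are hypothetical, and the sketch handles IPv4 only; real routers perform this lookup in the kernel or in hardware.

```python
import ipaddress

# Hypothetical routing table: client subnet -> backend server's private IP.
# The private addresses would sit behind NAT, as described above.
ROUTES = {
    ipaddress.ip_network("0.0.0.0/0"): "10.0.0.10",         # default route
    ipaddress.ip_network("203.0.113.0/24"): "10.0.0.11",
    ipaddress.ip_network("203.0.113.128/25"): "10.0.0.12",
}


def route_for(client_ip: str) -> str:
    """Pick a backend by longest-prefix match on the client's IPv4 address."""
    addr = ipaddress.ip_address(client_ip)
    matches = [net for net in ROUTES if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)  # most specific route wins
    return ROUTES[best]


print(route_for("198.51.100.7"))   # 10.0.0.10 (only the default route matches)
print(route_for("203.0.113.5"))    # 10.0.0.11
print(route_for("203.0.113.200"))  # 10.0.0.12
```

Since the choice depends only on the client's address, every packet from a given client reaches the same backend, which gives the stickiness mentioned above, but the split is only as fine-grained as the routes themselves.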

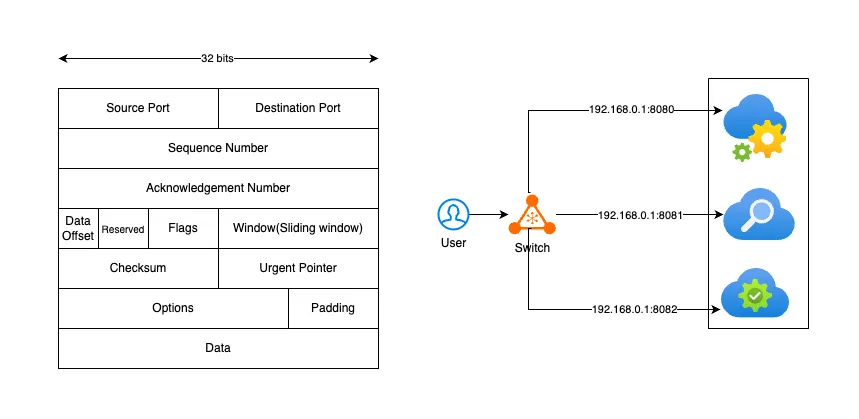

Transport Layer

The transport layer handles the protocols, such as TCP and UDP, used to transmit data between client and server. At this layer we have port numbers, which can be used to distribute the load among multiple services on the same machine.

Load Balancer at Transport Layer

Cons:

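- Only a limited number of ports is available on a machine or network interface: port numbers are 16-bit, so there are at most 65,535 usable ports per IP address for each protocol.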
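
A minimal sketch of port-based distribution on one machine, assuming a hypothetical setup where one public port fronts several local instances of the same service. Hashing the client's source IP and port keeps every segment of a TCP connection on one instance, which is how an L4 balancer avoids splitting a stream. `hashlib.sha256` is used instead of Python's built-in `hash()` because string hashing in Python is randomized per process.

```python
import hashlib

# Hypothetical mapping: public port -> ports of service instances on this host.
SERVICES = {
    80: [8001, 8002, 8003],  # e.g. three copies of the blog's web service
    25: [2501, 2502],        # e.g. two copies of a mail service
}


def backend_port(dst_port: int, src_ip: str, src_port: int) -> int:
    """Choose a local backend port for one TCP connection.

    Hashing the client's (IP, port) pair keeps every segment of a connection
    on the same instance, while different connections spread across instances.
    """
    instances = SERVICES[dst_port]
    key = f"{src_ip}:{src_port}".encode()
    digest = hashlib.sha256(key).digest()
    return instances[int.from_bytes(digest[:4], "big") % len(instances)]


port = backend_port(80, "198.51.100.7", 51000)
print(port)  # one of 8001, 8002, 8003; always the same for this connection
```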

Session Layer

Next we have the session layer, which is responsible for managing the session between client and server. In the TCP/IP stack, which has no separate session layer, this role is roughly played by sockets. On Unix-like systems, sockets are special files identified by file descriptors. A connected socket is created when a client connects to a server on a port, and these files can be shared among multiple processes on the system.

Cons:

- We can open only a limited number of sockets on a given interface, bounded by the available ports and by per-process file descriptor limits.
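
A minimal, POSIX-only sketch of sharing one socket among processes, the classic pre-fork server model: the parent opens a listening socket, forks worker processes that inherit it, and the kernel hands each incoming connection to one of the workers waiting in `accept()`. The function names are hypothetical, and each worker serves a single connection to keep the demo short. On Linux, the `SO_REUSEPORT` socket option is an alternative in which each process opens its own socket on the same port.

```python
import os
import socket


def start_prefork_server(num_workers: int = 2) -> tuple[int, list[int]]:
    """Open one listening socket, then fork workers that all accept() on it.

    POSIX-only (uses os.fork). Each worker serves one connection and exits.
    """
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    listener.listen(16)
    port = listener.getsockname()[1]
    pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:
            # Child process: it inherited the listening socket's file descriptor.
            try:
                conn, _addr = listener.accept()
                with conn:
                    conn.sendall(str(os.getpid()).encode())
            finally:
                os._exit(0)  # exit without running the parent's remaining code
        pids.append(pid)
    listener.close()  # the children still hold the shared socket open
    return port, pids


def fetch_worker_pid(port: int) -> int:
    """Connect as a client and read back the PID of the worker that answered."""
    with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
        chunks = []
        while data := client.recv(64):
            chunks.append(data)
    return int(b"".join(chunks))


port, pids = start_prefork_server(num_workers=2)
answered_by = [fetch_worker_pid(port) for _ in range(2)]
for pid in pids:
    os.waitpid(pid, 0)  # reap the finished workers
print(sorted(answered_by) == sorted(pids))  # True: each worker took one connection
```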

Presentation Layer

To balance the load at this layer, we need to understand the tasks performed by the presentation layer. The presentation layer is responsible for formatting, encrypting, and encoding data before it is sent over the network. Here we can employ specialized chips or services to offload these tasks from our main server.

Cons:

- Offloading may increase system latency, since data has to travel to the offload chip or service and back.
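
A minimal sketch of offloading, assuming compression as the presentation-layer task and a thread pool as a hypothetical stand-in for a dedicated chip or offload service. The request handler hands the encoding work to the pool through `asyncio`'s `run_in_executor`, so the event loop stays free for other clients, while each hand-off adds a little latency, which is the trade-off noted in the cons.

```python
import asyncio
import zlib
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for a dedicated offload chip or service: a separate pool
# of workers that does the CPU-heavy encoding instead of the request handler.
offload_pool = ThreadPoolExecutor(max_workers=4)


def encode_response(body: bytes) -> bytes:
    """Presentation-layer work: compress the payload before it is sent."""
    return zlib.compress(body, level=6)


async def handle_request(body: bytes) -> bytes:
    loop = asyncio.get_running_loop()
    # Hand the encoding to the offload pool; the event loop can serve other
    # requests while it waits, but the hand-off itself adds some latency.
    return await loop.run_in_executor(offload_pool, encode_response, body)


payload = b"<p>Hello, reader!</p>" * 500
compressed = asyncio.run(handle_request(payload))
print(len(payload), "->", len(compressed), "bytes")
```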

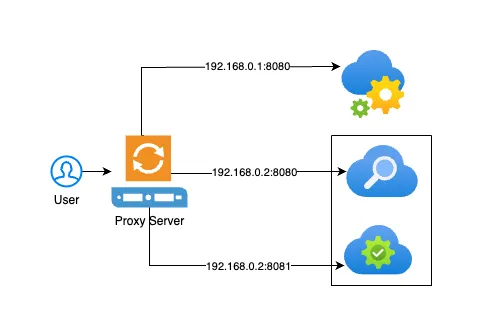

Application Layer

The application layer is the topmost layer of the OSI stack, home to protocols such as HTTP, FTP, and SMTP. Because this layer understands the application protocols, we can create intelligent proxies here that delegate requests to different servers.

Load Balancer at Application Layer

Cons:

- Each protocol at this layer is different, so we need to create a separate proxy for each protocol.

- Protocol limitations are a big issue at this layer. For example, many HTTP proxies have started supporting other protocols like FTP, but a proxy built around HTTP might not support all features of those protocols.
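
A minimal sketch of the routing decision an application-layer proxy makes, assuming hypothetical upstream pools keyed by URL path prefix. It reads only the HTTP/1.1 request line and rotates round robin within the chosen pool; a real proxy such as Nginx also parses headers, handles malformed requests, and actually forwards the traffic.

```python
from itertools import cycle

# Hypothetical upstream pools, keyed by URL path prefix.
UPSTREAMS = {
    "/api/": cycle(["10.0.1.1:8080", "10.0.1.2:8080"]),
    "/static/": cycle(["10.0.2.1:8080"]),
    "/": cycle(["10.0.3.1:8080", "10.0.3.2:8080"]),
}


def choose_upstream(raw_request: bytes) -> str:
    """Read an HTTP/1.1 request line and pick an upstream by path prefix."""
    request_line = raw_request.split(b"\r\n", 1)[0].decode("ascii")
    _method, path, _version = request_line.split(" ", 2)
    # Longest matching prefix wins, so "/api/users" goes to "/api/", not "/".
    prefix = max((p for p in UPSTREAMS if path.startswith(p)), key=len)
    return next(UPSTREAMS[prefix])


request = b"GET /api/users HTTP/1.1\r\nHost: blog.example\r\n\r\n"
print(choose_upstream(request))  # 10.0.1.1:8080
print(choose_upstream(request))  # 10.0.1.2:8080
print(choose_upstream(b"GET /static/logo.png HTTP/1.1\r\n\r\n"))  # 10.0.2.1:8080
```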

Types of Load Balancers

There are many ways to balance the load across servers, but broadly they can be categorized into three types:

- L3/L4 - Network load balancer: This combines IP-based and port-based load balancing. For a given source IP and port, it can route to a specific destination IP and port. Check out part 2 of this blog to create an L3/L4 load balancer using iptables.

- L7 - Application load balancer: This is based on protocols at the application layer. It mostly uses proxy servers, such as HTTP proxies, to route traffic to upstream servers. Because it can extract details such as the URL, cookies, and headers from a request, it can route based on them as well. Check out part 3 of this blog to create an L7 load balancer using the Nginx proxy.

- Global server load balancer: This type of load balancer combines the other two types to distribute traffic across multiple data centers and regions in different geographies.
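
A minimal sketch of the decision a global server load balancer makes, assuming it already has per-region latency measurements and health-check results for a client (the region names and numbers are hypothetical). In practice GSLBs are often implemented through DNS, answering with the address of the chosen region, where regional L3/L4 and L7 load balancers take over.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    latency_ms: float  # measured from the client's area (hypothetical numbers)
    healthy: bool      # result of the latest health check


def pick_region(regions: list[Region]) -> Region:
    """Send the client to the lowest-latency region that passes health checks."""
    candidates = [r for r in regions if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=lambda r: r.latency_ms)


regions = [
    Region("ap-south", latency_ms=20.0, healthy=False),
    Region("eu-west", latency_ms=120.0, healthy=True),
    Region("us-east", latency_ms=210.0, healthy=True),
]
print(pick_region(regions).name)  # eu-west (ap-south is closer but unhealthy)
```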

Takeaways

- Load balancers work best with stateless applications, so that one request can be processed by one server and the next by another.

- Some load balancers need special hardware and can become expensive to scale.

- We need to strike a balance between different load balancing solutions, such as L3/L4 and L7.
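
To illustrate the first takeaway, here is a minimal sketch, assuming a plain dict as a hypothetical stand-in for a shared session store such as a database or cache. Because the app servers keep no per-user state of their own, consecutive requests from one user can alternate between servers and still see consistent data.

```python
from itertools import cycle

# Hypothetical stand-in for an external store (database, cache) shared by all servers.
shared_sessions: dict[str, dict] = {}


class AppServer:
    """A stateless app server: it keeps no per-user data in its own memory."""

    def __init__(self, name: str, store: dict[str, dict]) -> None:
        self.name = name
        self.store = store

    def handle(self, session_id: str) -> int:
        # Load the session from the shared store and update it there, so the
        # next request from this user can land on any server.
        session = self.store.setdefault(session_id, {"page_views": 0})
        session["page_views"] += 1
        return session["page_views"]


servers = cycle([AppServer("server-a", shared_sessions), AppServer("server-b", shared_sessions)])
views = [next(servers).handle("user-42") for _ in range(3)]
print(views)  # [1, 2, 3] even though the requests alternate between servers
```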